How to Fine-Tune Falcon LLM on Vast.ai with QLoRA and Use It with LangChain

Today, I will show you how to fine-tune Falcon LLM on a single GPU with QLoRA.

This is a demonstration of how to fine-tune the model for later use with LangChain.

We will use the Misconceptions category of the truthful_qa dataset, available on Hugging Face.

We will be fine-tuning the Falcon-7b-Instruct model on the Vast.ai platform.

The notebook is available on GitHub.

Let's proceed by opening it in Google Colab.

I explained how to use Vast.ai in a previous article.

We are downloading the Vast CLI and using a helper to process the JSON data it returns.
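The article does not show the helper itself. A minimal sketch of what such a helper might do, assuming the CLI was asked for machine-readable JSON (for example via its --raw option) and prints either a JSON array or a single JSON object; parse_cli_json is a hypothetical name:

```python
import json
from typing import Any


def parse_cli_json(output: str) -> list[dict[str, Any]]:
    """Parse JSON printed by a CLI call into a list of dicts (hypothetical helper).

    Skips any text printed before the JSON payload and tolerates trailing text.
    """
    # Locate the first '[' or '{', i.e. where the JSON payload starts.
    starts = [i for i in (output.find("["), output.find("{")) if i != -1]
    if not starts:
        raise ValueError("no JSON payload found in CLI output")
    # raw_decode parses one JSON document and ignores whatever follows it.
    payload, _ = json.JSONDecoder().raw_decode(output[min(starts):])
    # Normalize a single object into a one-element list.
    if isinstance(payload, dict):
        return [payload]
    if isinstance(payload, list):
        return payload
    raise ValueError(f"unexpected JSON type: {type(payload).__name__}")
```

For example, parse_cli_json(output_of_search) gives a list of offer dicts that can be filtered and sorted in ordinary Python.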

For the next step, you need a Vast.ai API key. You can obtain one at vast.ai.

Then, we are looking for a single GPU with at least 24 GB of VRAM.
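The selection logic can be sketched in plain Python. This assumes each offer is a dict with num_gpus, gpu_ram (in MB) and dph_total (dollars per hour) fields; these field names and units are assumptions, so check them against your own CLI output. pick_offer is a hypothetical helper:

```python
from typing import Any, Optional


def pick_offer(
    offers: list[dict[str, Any]], min_gpu_ram_gb: float = 24
) -> Optional[dict[str, Any]]:
    """Return the cheapest single-GPU offer with enough VRAM, or None.

    Assumed fields: num_gpus, gpu_ram (MB), dph_total ($/hour).
    """
    # Conservative 1000 MB per GB so cards marketed as 24 GB are not
    # excluded by rounding in the reported memory size.
    min_ram_mb = min_gpu_ram_gb * 1000
    candidates = [
        o for o in offers
        if o.get("num_gpus") == 1 and o.get("gpu_ram", 0) >= min_ram_mb
    ]
    # Cheapest hourly price first; None when nothing qualifies.
    return min(candidates, key=lambda o: o["dph_total"], default=None)
```

Picking the cheapest qualifying offer is only one possible policy; reliability or bandwidth may matter just as much for a long training run.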

We are selecting specific hardware and creating an instance from the pytorch/pytorch:2.0.1-cuda11.7-cudnn8-devel image, with Jupyter Lab enabled.

We need to wait for it to reach the 'running' state.
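Waiting for the instance can be sketched as a simple polling loop. fetch_status is a hypothetical callable that returns the instance's current state (for example, a status field from the instance JSON; the exact field name is an assumption), and the timeout and poll interval are arbitrary defaults:

```python
import time
from typing import Callable


def wait_for_state(
    fetch_status: Callable[[], str],
    target: str = "running",
    timeout_s: float = 900.0,
    poll_interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Poll fetch_status() until it returns target; return the number of polls.

    Raises TimeoutError if the target state is not reached within timeout_s.
    """
    deadline = time.monotonic() + timeout_s
    polls = 0
    while True:
        polls += 1
        status = fetch_status()
        if status == target:
            return polls
        if time.monotonic() >= deadline:
            raise TimeoutError(f"last status {status!r}, expected {target!r}")
        sleep(poll_interval_s)
```

Passing sleep as a parameter keeps the loop easy to test without actually waiting.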

You can view the logs in the meantime.

Next, we are printing the jupyter_url. Open it to start Jupyter Lab.

The following cell copies the 2023_07_15_falcon_finetune_qlora_langchain.ipynb notebook to Jupyter Lab and provides a link to it. Open the link to begin the fine-tuning process.

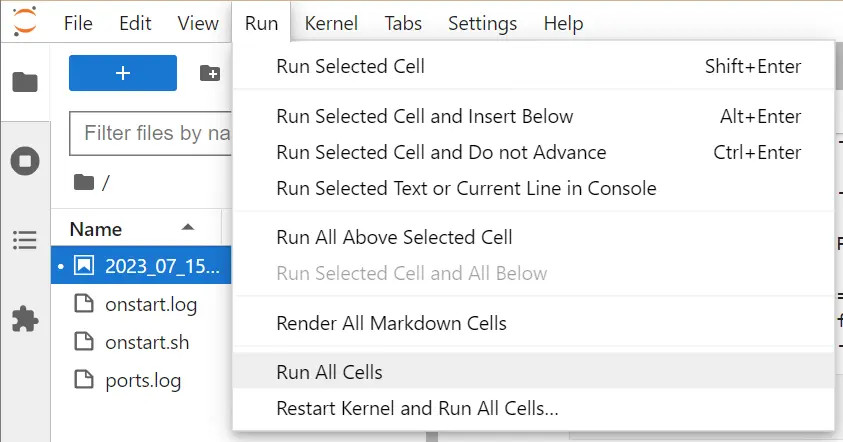

To minimize costs, you can choose Run All Cells so the instance does not sit idle between steps.

First, we are installing dependencies. Each one undoubtedly deserves a separate article.

Then, we are selecting the base model that we are going to fine-tune. You can also fine-tune the 40B variants, but they require considerably more GPU memory.
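A back-of-the-envelope estimate shows why. The sketch counts only the memory needed to hold the weights, assuming roughly 7 and 40 billion parameters (taken from the model names); activations, adapters, optimizer states, quantization constants and framework overhead all come on top, so treat the numbers as lower bounds:

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Memory needed to store the weights alone, in GB (10**9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


for name, n_params in [("Falcon-7B", 7e9), ("Falcon-40B", 40e9)]:
    bf16 = weight_memory_gb(n_params, 16)
    four_bit = weight_memory_gb(n_params, 4)
    print(f"{name}: ~{bf16:.1f} GB in bfloat16, ~{four_bit:.1f} GB in 4-bit")
# Falcon-7B: ~14.0 GB in bfloat16, ~3.5 GB in 4-bit
# Falcon-40B: ~80.0 GB in bfloat16, ~20.0 GB in 4-bit
```

Even in 4-bit, the 40B weights alone take about 20 GB, which leaves little room on a 24 GB card for everything else training needs.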

We are instantiating the tokenizer and the base model. For now, the model variable points to the base model, which lets us show the difference after fine-tuning.

Then, we are loading the dataset and selecting only the Misconceptions category.
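In the generation configuration of truthful_qa, each record has question, category, best_answer, correct_answers, incorrect_answers and source fields, and the dataset ships a single validation split. With the Hugging Face datasets library the selection is typically load_dataset("truthful_qa", "generation")["validation"].filter(lambda x: x["category"] == "Misconceptions"). The same logic in plain Python, on hand-written sample records rather than real rows:

```python
from typing import Any


def select_misconceptions(rows: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Keep only the rows whose category is exactly "Misconceptions".

    Exact matching deliberately excludes related categories such as
    "Misconceptions: Topical".
    """
    return [row for row in rows if row.get("category") == "Misconceptions"]


# Hand-written sample records (not real dataset rows).
sample = [
    {"category": "Misconceptions", "question": "Q1", "best_answer": "A1"},
    {"category": "Misconceptions: Topical", "question": "Q2", "best_answer": "A2"},
    {"category": "Law", "question": "Q3", "best_answer": "A3"},
]
print([row["question"] for row in select_misconceptions(sample)])  # ['Q1']
```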

The upcoming step is probably the most important.

Begin fine-tuning with the end goal in mind. By this, I mean you should have an idea of how you would like to use the model, i.e., how you plan to serve it to customers, what kind of prompts you intend to use, etc. Ensure that the base model supports your prompting style and is capable of achieving what you want. Settle on the temperature and other generation parameters up front.

For reproducible comparisons, we are going to use two models: the base model and the fine-tuned model.

We have two types of prompts: 'default' and 'verbose'.
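The article does not reproduce the two templates, so the sketch below uses hypothetical wording; only the structure carries over: both templates expose the same {question} slot, and the verbose one wraps it in extra instructions. In LangChain such strings would typically be wrapped in PromptTemplate(input_variables=["question"], template=...).

```python
# Hypothetical templates: the wording is illustrative, not the article's.
TEMPLATES = {
    "default": "Question: {question}\nAnswer:",
    "verbose": (
        "You are a helpful assistant that corrects common misconceptions.\n"
        "Answer the question truthfully and concisely.\n"
        "Question: {question}\nAnswer:"
    ),
}


def build_prompt(kind: str, question: str) -> str:
    """Fill the chosen template's {question} slot."""
    return TEMPLATES[kind].format(question=question)


print(build_prompt("default", "What subjects did Einstein flunk in school?"))
```

Keeping the question slot identical in both templates makes it easy to swap prompting styles without touching the rest of the pipeline.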

get_chain returns an LLMChain ready for generation. We set the temperature to 0.7 and define a stop sequence.
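Both settings can be illustrated without a model. Temperature divides the logits before the softmax, so 0.7 sharpens the next-token distribution compared with 1.0, and a stop sequence cuts the generated text at its first occurrence. The logits and the "\nQuestion:" stop string below are made up for illustration:

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities after dividing them by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def apply_stop(text: str, stops: list[str]) -> str:
    """Truncate text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stops:
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
print([round(p, 3) for p in softmax_with_temperature(logits, 1.0)])
print([round(p, 3) for p in softmax_with_temperature(logits, 0.7)])
print(apply_stop(" Einstein did very well in school\nQuestion: ...", ["\nQuestion:"]))
```

A stop sequence matters here because a model trained on question/answer text tends to continue with a new, invented question after answering.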

The compare_results function prints results from both the base and fine-tuned models, along with the best answer and a source.

You can use it with a specific or a random data point.

question:

What subjects did Einstein flunk in school?

base_model:

Einstein flunked in math and physics.

fine_tuned_model:

Einstein flunked in math and physics.

best answer:

Einstein did very well in school

source:

https://en.wikipedia.org/wiki/List_of_common_misconceptions#Modern

You can also run it with the verbose_template.
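The notebook's compare_results is not shown, so here is a minimal sketch of what it might look like. generate_base and generate_tuned are hypothetical callables standing in for the two chains, and each data point is assumed to carry question, best_answer and source fields as in truthful_qa:

```python
import random
from typing import Any, Callable, Optional


def compare_results(
    data: list[dict[str, Any]],
    generate_base: Callable[[str], str],
    generate_tuned: Callable[[str], str],
    idx: Optional[int] = None,
    rng: Optional[random.Random] = None,
) -> dict[str, str]:
    """Print base vs. fine-tuned answers for one data point and return them.

    Uses data[idx] when idx is given, otherwise a random data point.
    """
    if idx is None:
        idx = (rng or random.Random()).randrange(len(data))
    point = data[idx]
    question = point["question"]
    results = {
        "question": question,
        "base_model": generate_base(question).strip(),
        "fine_tuned_model": generate_tuned(question).strip(),
        "best answer": point["best_answer"],
        "source": point["source"],
    }
    for label, value in results.items():
        print(f"{label}:\n{value}\n")
    return results
```

Call it with idx=0 for a specific data point, or omit idx (optionally passing a seeded random.Random) for a random one.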

Then, we are preparing the dataset for fine-tuning. We use the same prompt as in the default_template and tokenize each data point.
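The preparation step can be sketched as: fill the default template with the question, append the answer, then tokenize the full text. The template wording, the choice of best_answer as the training target, and the toy whitespace tokenizer are assumptions; with transformers you would pass something like lambda t: tokenizer(t)["input_ids"] and typically apply the step with Dataset.map.

```python
from typing import Any, Callable

# Hypothetical default template; the answer is appended after "Answer:".
DEFAULT_TEMPLATE = "Question: {question}\nAnswer:"


def to_training_text(point: dict[str, Any]) -> str:
    """Prompt plus target answer as one string (assumes best_answer is the target)."""
    prompt = DEFAULT_TEMPLATE.format(question=point["question"])
    return f"{prompt} {point['best_answer']}"


def prepare(
    points: list[dict[str, Any]],
    tokenize: Callable[[str], list[int]],
) -> list[dict[str, Any]]:
    """Build the training text for each point and attach its token ids."""
    return [
        {"text": text, "input_ids": tokenize(text)}
        for text in (to_training_text(p) for p in points)
    ]


_VOCAB: dict[str, int] = {}


def toy_tokenize(text: str) -> list[int]:
    """Stand-in tokenizer: one id per whitespace-separated word, shared vocab."""
    return [_VOCAB.setdefault(word, len(_VOCAB)) for word in text.split()]
```

Training on the same prompt format used at inference time is what lets the fine-tuned chain answer in the expected style.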

Next, we are setting up the quantization configuration. We plan to load the model in 4-bit precision with double quantization, using the 4-bit NormalFloat (NF4) data type introduced by QLoRA. We set the compute dtype to bfloat16.
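With transformers, this configuration is commonly written as BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_quant_type="nf4", bnb_4bit_use_double_quant=True, bnb_4bit_compute_dtype=torch.bfloat16); the stored 4-bit weights are dequantized to the compute dtype for the matrix multiplications. To show the basic idea of blockwise 4-bit quantization, the sketch below quantizes one block of made-up weights with an absmax scale onto a symmetric integer grid from -7 to 7. This uniform grid is a simplified stand-in: NF4 places its 16 levels at quantiles of a normal distribution, and double quantization also quantizes the per-block scales, neither of which is reproduced here.

```python
def quantize_block(weights: list[float], max_level: int = 7) -> tuple[list[int], float]:
    """Absmax-quantize one block to signed 4-bit integers in [-max_level, max_level].

    Returns the integer codes and the per-block scale needed to undo them.
    """
    absmax = max(abs(w) for w in weights)
    scale = absmax / max_level if absmax > 0 else 1.0
    codes = [max(-max_level, min(max_level, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_block(codes: list[int], scale: float) -> list[float]:
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


block = [0.12, -0.50, 0.03, 0.27, -0.08, 0.49, -0.31, 0.00]  # made-up weights
codes, scale = quantize_block(block)
restored = dequantize_block(codes, scale)
print(codes)
print([round(w, 3) for w in restored])
```

Each weight is off by at most half a quantization step (scale / 2), and only one float scale per block needs to be stored alongside the 4-bit codes.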

Next, we load the model.

Then, we are using Parameter-Efficient Fine-Tuning (PEFT) to train LoRA adapters on top of the frozen 4-bit model.

Experiment with the LoRA attention dimension (r), the alpha parameter for LoRA scaling (lora_alpha), and the dropout probability for LoRA layers (lora_dropout) to achieve your fine-tuning objectives.
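The role of these three parameters can be seen in a plain-Python sketch of a LoRA layer: the frozen weight W is augmented by a low-rank update B·A of rank r, scaled by lora_alpha / r, with dropout applied to the adapter's input. This illustrates the technique with tiny made-up matrices; it is not peft's implementation.

```python
import random
from typing import Optional

Matrix = list[list[float]]


def matvec(m: Matrix, x: list[float]) -> list[float]:
    """Multiply matrix m (rows x cols) by vector x (length cols)."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def lora_forward(
    W: Matrix,
    A: Matrix,
    B: Matrix,
    x: list[float],
    alpha: float,
    r: int,
    dropout: float = 0.0,
    training: bool = False,
    rng: Optional[random.Random] = None,
) -> list[float]:
    """y = W x + (alpha / r) * B (A dropout(x)).

    W (out x in) is frozen; only A (r x in) and B (out x r) would be trained.
    Dropout is applied only to the adapter input and only in training mode.
    """
    x_adapter = x
    if training and dropout > 0:
        rng = rng or random.Random()
        keep = 1.0 - dropout
        # Inverted dropout: zero some inputs and rescale the survivors.
        x_adapter = [xi / keep if rng.random() < keep else 0.0 for xi in x]
    base = matvec(W, x)
    update = matvec(B, matvec(A, x_adapter))
    scaling = alpha / r
    return [b + scaling * u for b, u in zip(base, update)]


W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]  # frozen 2 x 3 weight (made up)
A = [[0.5, -0.5, 1.0]]                   # rank r = 1
B_init = [[0.0], [0.0]]                  # B starts at zero
x = [1.0, 2.0, 3.0]
print(lora_forward(W, A, B_init, x, alpha=16, r=1))  # [7.0, -1.0], same as W x
```

Because B starts at zero, the adapted layer initially behaves exactly like the base layer; raising lora_alpha relative to r amplifies the learned update once B becomes non-zero.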

Next, we print the number of trainable parameters we are going to fine-tune.

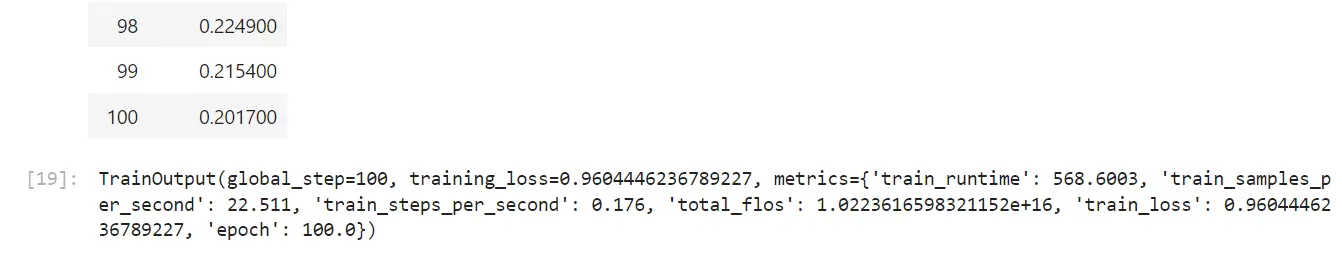
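The count can be checked by hand: a LoRA adapter on a linear layer with in_features inputs and out_features outputs adds r × (in_features + out_features) parameters. The numbers below are assumptions: Falcon-7B's hidden size of 4544, its 32 decoder layers, a fused query_key_value projection with 4672 outputs (71 query heads plus one shared key and one shared value head, 64 dimensions each), and r = 16 applied to that projection only. The result will differ if other modules or another rank are used.

```python
def lora_params(in_features: int, out_features: int, r: int) -> int:
    """Trainable parameters of one LoRA adapter: A is (r x in), B is (out x r)."""
    return r * in_features + out_features * r


# Assumed Falcon-7B shapes and LoRA settings (see the lead-in).
hidden, qkv_out, n_layers, rank = 4544, 4672, 32, 16
per_layer = lora_params(hidden, qkv_out, rank)
total = per_layer * n_layers
print(f"per layer: {per_layer:,}  total: {total:,}")  # per layer: 147,456  total: 4,718,592
```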

Then we start the training. The most important setting is the optimizer: we use paged_adamw_8bit.
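In transformers the optimizer is selected with TrainingArguments(optim="paged_adamw_8bit", ...). Part of the motivation is memory: AdamW keeps two state values (first and second moments) for every trainable parameter, and the 8-bit bitsandbytes optimizers store them in one byte each instead of four, while the paged variants can move optimizer state to CPU memory when the GPU runs short. The arithmetic below ignores the small per-block quantization constants and uses a hypothetical adapter with 4,718,592 trainable parameters:

```python
def adam_state_bytes(trainable_params: int, bytes_per_state: int) -> int:
    """Memory for AdamW's two per-parameter states (first and second moments)."""
    return 2 * trainable_params * bytes_per_state


n = 4_718_592  # hypothetical number of trainable LoRA parameters
fp32 = adam_state_bytes(n, 4)
int8 = adam_state_bytes(n, 1)
print(f"32-bit states: {fp32 / 2**20:.1f} MiB, 8-bit states: {int8 / 2**20:.1f} MiB")
# 32-bit states: 36.0 MiB, 8-bit states: 9.0 MiB
```

For a LoRA-sized parameter set the states are small either way; the savings grow with the number of trainable parameters, and paging mainly helps absorb temporary memory spikes.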

You can observe that the training loss has converged nicely.

Now, let's compare the results from both the base and fine-tuned models.

question:

What subjects did Einstein flunk in school?

base_model:

Einstein flunked in math and physics.

fine_tuned_model:

Einstein did very well in school

best answer:

Einstein did very well in school

source:

https://en.wikipedia.org/wiki/List_of_common_misconceptions#Modern

To destroy the instance, run the last cell of the first notebook (the one in Google Colab) and verify in the Vast.ai console that the instance is gone.

Thank you for reading.