Build Your Own AI-Powered Chat App with Next.js and LangChain

Today I will show how to build an AI-powered chat application with Next.js and LangChain.

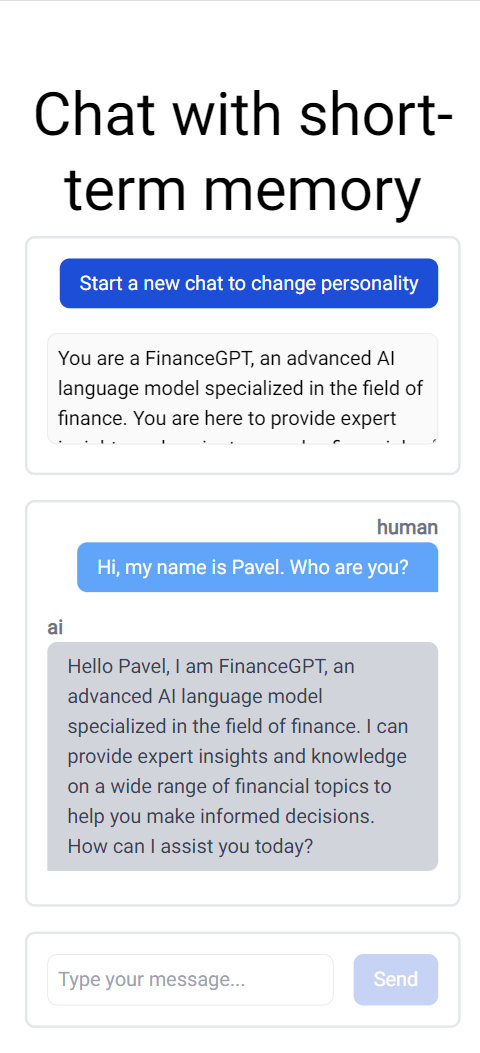

App features:

- You can set an AI persona for your chat.

- You can send a message and get a streaming response from the LLM.

- Received messages are auto-scrolled into view.

- The chat model remembers the current conversation.

- You can start a new chat to change the AI persona.

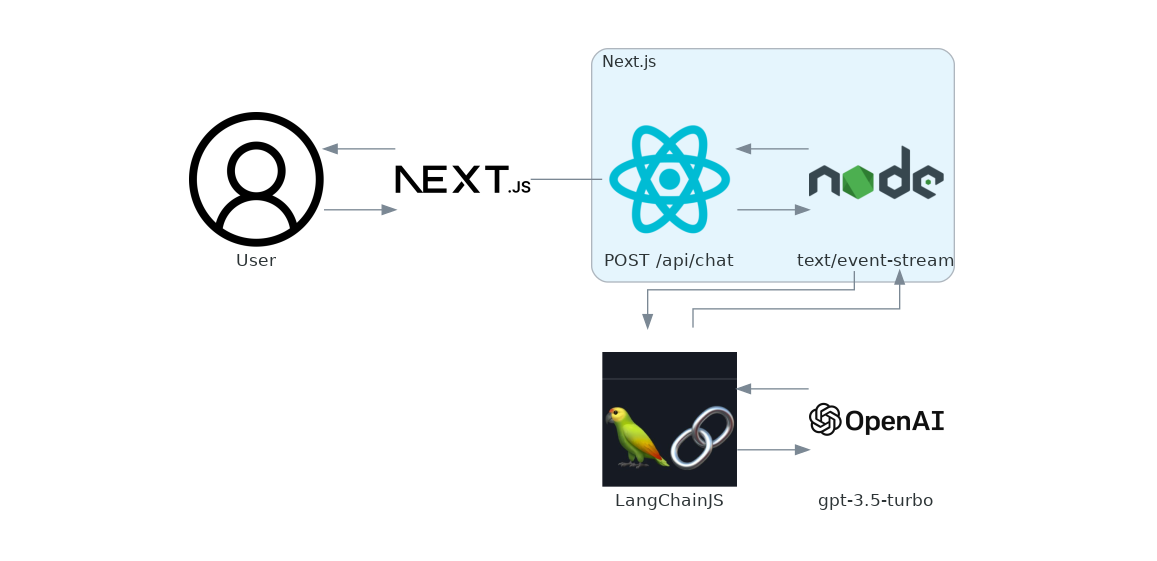

User input gets POSTed to the /api/chat route, where the prompt and the ConversationChain are created. The model's streaming response is then returned as server-sent events (SSE).

I considered two options for streaming output from LangChain: server-sent events and WebSockets. SSE works on top of HTTP and supports one-way (server-to-client) communication. WebSockets are a good choice for a production app: the protocol supports bidirectional communication, but it will make your backend stateful.
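On the wire, SSE is plain text: each event is one or more `data:` lines terminated by a blank line. Below is a minimal sketch of that framing, assuming the server sends each token as its own event. The helpers `toSseEvent` and `parseSseEvents` are hypothetical, not part of Next.js or LangChain, and the parser is simplified: it handles only `data:` fields and `\n` line endings.

```typescript
// Hypothetical helpers illustrating the SSE wire format (not a library API).

// Encode one payload as an SSE event. A payload containing newlines is split
// into several "data:" lines; a blank line ("\n\n") ends the event.
export function toSseEvent(payload: string): string {
  return (
    payload
      .split("\n")
      .map((line) => `data: ${line}`)
      .join("\n") + "\n\n"
  );
}

// Decode a complete SSE text buffer back into payloads.
// Simplified: only "data:" fields are read; the data lines of one event are joined with "\n".
export function parseSseEvents(text: string): string[] {
  const events: string[] = [];
  for (const block of text.split("\n\n")) {
    if (block === "") continue; // trailing empty piece after the last event
    const dataLines = block
      .split("\n")
      .filter((line) => line.startsWith("data:"))
      // Drop the "data:" prefix and one optional leading space.
      .map((line) => line.slice(5).replace(/^ /, ""));
    if (dataLines.length > 0) events.push(dataLines.join("\n"));
  }
  return events;
}
```

Splitting multi-line payloads matters for LLM output, since a token such as a newline would otherwise end the event early.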

To set up the project (initialize a Next.js app, install langchain, and download all code snippets), run:

`npx https://gist.github.com/shibanovp/f9b9631c9f33250a663b3d7fc6be05df chat`

(Optional) Take a look at the gist to see how the setup works.

Set your OPENAI_API_KEY in .env.local.

The server-side Home component renders the client-side `<Chat />` component.

The Chat component sends the values of its controlled inputs to the POST /api/chat route.

When we type a message and submit the form, handleSubmit is called.

After preventing the default form action, it sends a POST request to the /api/chat route with persona, input, and messageHistory, and gets back server-sent events.
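A sketch of the request handleSubmit might send, assuming a JSON body with exactly the three fields above and a messageHistory made of role/content pairs. The `ChatTurn` and `ChatRequestBody` types and the `buildChatRequest` helper are hypothetical:

```typescript
// Hypothetical shape of one past turn; the real app may store messages differently.
export type ChatTurn = { role: "human" | "ai"; content: string };

// Body sent to POST /api/chat: the three fields handleSubmit posts.
export type ChatRequestBody = {
  persona: string;
  input: string;
  messageHistory: ChatTurn[];
};

// Build the fetch() options for the chat request as a JSON POST.
export function buildChatRequest(body: ChatRequestBody): RequestInit {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  };
}
```

In the component this would be used as `fetch("/api/chat", buildChatRequest({ persona, input, messageHistory }))`.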

We then read the response body chunk by chunk and immediately update the output state with each chunk, which gives us a nice typing effect.
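The read loop could look like the sketch below. It assumes the server sends one SSE event per token; `readChatStream` and its `onText` callback are hypothetical names, and in the Chat component `onText` would be the setter for the output state. Because network chunks do not line up with event boundaries, the loop buffers text until it sees the blank line that ends an event.

```typescript
// Hypothetical helper: read an SSE response body and report the accumulated
// text after every complete event. Simplified parser: "data:" fields and "\n" only.
export async function readChatStream(
  body: ReadableStream<Uint8Array>,
  onText: (textSoFar: string) => void,
): Promise<string> {
  const reader = body.getReader();
  const decoder = new TextDecoder();
  let buffer = ""; // raw text that does not yet form a complete event
  let output = ""; // assistant message accumulated so far

  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    // stream: true keeps multi-byte characters split across chunks intact.
    buffer += decoder.decode(value, { stream: true });

    // A blank line ("\n\n") terminates each event; keep any partial tail in the buffer.
    let end: number;
    while ((end = buffer.indexOf("\n\n")) !== -1) {
      const block = buffer.slice(0, end);
      buffer = buffer.slice(end + 2);
      const data = block
        .split("\n")
        .filter((line) => line.startsWith("data:"))
        .map((line) => line.slice(5).replace(/^ /, "")) // drop "data:" and one space
        .join("\n");
      output += data;
      onText(output); // e.g. setOutput(output) in the Chat component
    }
  }
  return output;
}
```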

The ChatMessage component displays individual messages.

POST /api/chat route

We read the request body, construct the pastMessages array, and call the callChain function with the user input. It returns a ReadableStream, which we pass to the Response constructor along with the headers required for SSE. Note that the route does not depend on a concrete chat model: we use OpenAI, but ChatAnthropic is available as an alternative.
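A runnable sketch of the route's shape, assuming the App Router convention of exporting a `POST` function from app/api/chat/route.ts. To keep it free of LangChain and an API key, callChain is replaced by a hypothetical `fakeCallChain` that streams one canned event, and the past messages are plain objects; in the real route they would be LangChain's human and AI message objects.

```typescript
// Hypothetical shapes; the article's app may name these differently.
type ChatTurn = { role: "human" | "ai"; content: string };
type ChatRequestBody = { persona: string; input: string; messageHistory: ChatTurn[] };

// Plain stand-in for LangChain's message objects, so the sketch has no dependencies.
type PastMessage = { type: "human" | "ai"; text: string };

// Hypothetical stand-in for callChain: streams one canned SSE event instead of calling an LLM.
function fakeCallChain(persona: string, input: string, pastMessages: PastMessage[]): ReadableStream<Uint8Array> {
  const encoder = new TextEncoder();
  const reply = `(${persona}, ${pastMessages.length} past messages) You said: ${input}`;
  return new ReadableStream<Uint8Array>({
    start(controller) {
      controller.enqueue(encoder.encode(`data: ${reply}\n\n`));
      controller.close();
    },
  });
}

// Route handler: parse the body, build pastMessages, return the stream as SSE.
export async function POST(req: Request): Promise<Response> {
  const { persona, input, messageHistory } = (await req.json()) as ChatRequestBody;

  // Map the client's history to the message objects the chain's memory expects.
  const pastMessages: PastMessage[] = messageHistory.map((m) => ({ type: m.role, text: m.content }));

  const stream = fakeCallChain(persona, input, pastMessages);
  return new Response(stream, {
    headers: {
      "Content-Type": "text/event-stream", // the SSE media type
      "Cache-Control": "no-cache", // ask caches not to reuse a stored response
    },
  });
}
```

Swapping OpenAI for ChatAnthropic would only change what happens inside callChain; the route itself stays the same.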

Let's look at how callChain gets output from the chat model.

First, we create a TransformStream; its readable side is what the function returns. Then we create a ChatOpenAI model with its parameters. By default we use gpt-3.5-turbo, but you can switch to gpt-4 with the modelName parameter.
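The model parameters could be collected in a plain options object like the one below. The field names follow the ChatOpenAI constructor options used in LangChain.js releases of that period (`modelName`, `temperature`, `streaming`), but treat the exact field set as an assumption and check it against the version you install. `chatModelOptions` is a hypothetical helper, and the default temperature of 0.7 is an arbitrary choice for illustration.

```typescript
// Hypothetical options shape for the chat model.
type ChatModelOptions = {
  modelName: string; // OpenAI model id, e.g. "gpt-3.5-turbo" or "gpt-4"
  temperature: number; // higher values give more varied replies
  streaming: boolean; // per-token callbacks only fire when streaming is enabled
};

// Defaults plus per-call overrides (e.g. switching to gpt-4).
export function chatModelOptions(overrides: Partial<ChatModelOptions> = {}): ChatModelOptions {
  return { modelName: "gpt-3.5-turbo", temperature: 0.7, streaming: true, ...overrides };
}
```

In callChain this would be spread into the constructor, e.g. `new ChatOpenAI({ ...chatModelOptions({ modelName: "gpt-4" }), callbackManager })`.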

The most important part is the callbackManager, where we encode each new token and write it to the stream.
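The streaming half of callChain can be sketched without LangChain: create a TransformStream, keep a writer for its writable side, and have the token handler encode each token as an SSE event. In the real code the handler would be registered as handleLLMNewToken on the callbackManager; here `createTokenStream` and `onToken` are hypothetical names, and one `data:` event per token is an assumed framing. Note that with the default queuing strategy, writes wait until the reader pulls (backpressure), so the readable side has to be consumed while tokens arrive.

```typescript
// Hypothetical helper: a TransformStream whose readable side goes to the Response,
// plus callbacks that write LLM tokens into its writable side as SSE events.
export function createTokenStream() {
  const { readable, writable } = new TransformStream<Uint8Array, Uint8Array>();
  const writer = writable.getWriter();
  const encoder = new TextEncoder();

  // Would be wired to handleLLMNewToken(token) in callChain.
  // Multi-line tokens become several "data:" lines so the SSE framing stays valid.
  const onToken = async (token: string): Promise<void> => {
    const lines = token
      .split("\n")
      .map((line) => `data: ${line}`)
      .join("\n");
    await writer.write(encoder.encode(`${lines}\n\n`));
  };

  // Close the stream once the chain is done so the client's read loop ends.
  const close = (): Promise<void> => writer.close();

  return { readable, onToken, close };
}
```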

Next, we create a promptTemplate to configure the chatbot and initialize BufferMemory as short-term memory. In a production app, consider loading chatHistory and persona from a database.
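To show what the prompt template and BufferMemory contribute, here is a dependency-free stand-in: a buffer that keeps every turn of the current conversation, and a function that renders the persona, the history, and the new input into one prompt. It mirrors the idea, not LangChain's API; `ConversationBuffer`, `renderPrompt`, and the `System:`/`Human:`/`AI:` prompt layout are hypothetical.

```typescript
type Turn = { role: "human" | "ai"; content: string };

// Stand-in for BufferMemory: keeps every turn of the current conversation, in order.
export class ConversationBuffer {
  private turns: Turn[];

  // Seed with earlier turns, e.g. pastMessages from the request or rows from a database.
  constructor(initial: Turn[] = []) {
    this.turns = [...initial];
  }

  add(role: Turn["role"], content: string): void {
    this.turns.push({ role, content });
  }

  history(): readonly Turn[] {
    return this.turns;
  }
}

// Stand-in for the prompt template: persona first, then the history, then the new input.
export function renderPrompt(persona: string, memory: ConversationBuffer, input: string): string {
  const lines = [`System: ${persona}`];
  for (const t of memory.history()) {
    lines.push(`${t.role === "human" ? "Human" : "AI"}: ${t.content}`);
  }
  lines.push(`Human: ${input}`);
  return lines.join("\n");
}
```

Because the whole buffer is replayed on every call, the prompt grows with the conversation; that is the trade-off of keeping "short-term memory" this simple.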

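Finally, we create a ConversationChain and call it with the user input. It is important not to await the returned promise: if callChain waited for the chain to finish, the readable stream (and with it the Response) would not be returned until the whole answer had been generated, so the user would not see tokens as they arrive.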
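The sketch below shows that control flow with a hypothetical, timer-driven `fakeChainCall` standing in for the ConversationChain call. `callChainSketch` (also hypothetical) starts the call without awaiting it, closes the writer when generation finishes, aborts it on error so the reader sees the failure, and returns the readable side straight away. The close/abort handling is an assumption; the article does not show how its stream is finished.

```typescript
// Hypothetical stand-in for chain.call(): emits tokens asynchronously, then resolves.
function fakeChainCall(tokens: string[], onToken: (t: string) => Promise<void>): Promise<void> {
  return (async () => {
    for (const t of tokens) {
      await new Promise((resolve) => setTimeout(resolve, 10)); // simulated model latency
      await onToken(t);
    }
  })();
}

// Sketch of callChain's control flow: start the call, do NOT await it, return the stream.
// Tokens are assumed to contain no newlines, so each one fits in a single "data:" line.
export function callChainSketch(tokens: string[]): ReadableStream<Uint8Array> {
  const { readable, writable } = new TransformStream<Uint8Array, Uint8Array>();
  const writer = writable.getWriter();
  const encoder = new TextEncoder();

  fakeChainCall(tokens, (t) => writer.write(encoder.encode(`data: ${t}\n\n`)))
    .then(() => writer.close()) // end the SSE stream when generation finishes
    .catch((err) => writer.abort(err)); // error the stream so the reader sees the failure

  return readable; // returned before the first token has been produced
}
```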